Meta has announced that it is suspending teenagers' access to its existing AI characters across all of its apps worldwide, a move that technology experts describe as a major shift toward protecting minors in the digital age.

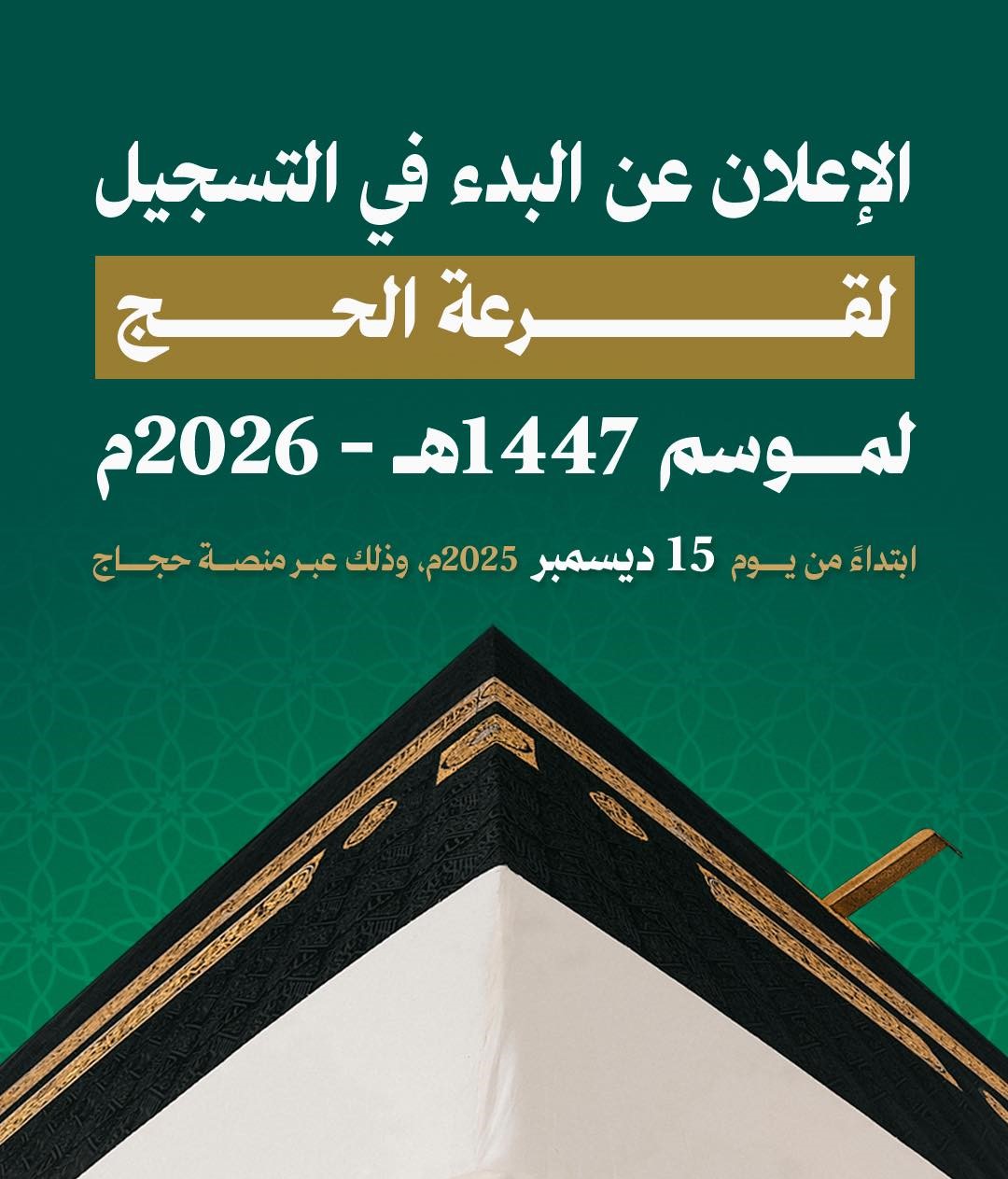

In an update to a post on its blog dedicated to child safety, the company stated that "starting in the coming weeks, teens will not be able to access AI characters through our apps until the updated experience is ready." It explained that the new version will introduce parental control tools that let parents monitor their children's experience and help keep them safe while interacting with AI characters.

This teen-focused version will be guided by the PG-13 movie rating standard, with the aim of blocking inappropriate content and encouraging safer use of the technology.

In October, Meta announced parental control tools that would let parents turn off teenagers' conversations with AI characters. The announcement followed a wave of sharp criticism of the company over its chatbots' behavior and their impact on teenagers' mental health, especially that of girls. However, these controls have not yet launched; they will now become an essential part of the updated experience.

This step suggests that major technology companies have begun moving quickly to confront potential digital risks to minors, with products meant to combine entertainment and learning while maintaining safety.

Concern is growing worldwide about the impact of social media platforms and AI applications on teenagers' mental health. Studies have linked exposure to inappropriate or excessive content with sleep and mood disorders, as well as increased anxiety and depression.

The PG-13 rating, which Meta will adopt, is a film classification from the Motion Picture Association indicating that some material may be inappropriate for children under 13. It has become a widely recognized benchmark for judging what content is suitable for teenagers, and applying it to AI characters makes Meta's move a notable step in the field of digital safety.